yolort intuition

[1]:

import cv2
import torch
from yolort.utils import Visualizer, get_image_from_url, read_image_to_tensor
from yolort.v5.utils.downloads import safe_download

[2]:

import os

# Order GPUs by PCI bus ID and expose only GPU 0 to this process
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Read the image

Fetch the image to run detection on and preprocess it.

[3]:

img_source = "https://huggingface.co/spaces/zhiqwang/assets/resolve/main/bus.jpg"
# img_source = "https://huggingface.co/spaces/zhiqwang/assets/resolve/main/zidane.jpg"
img_raw = get_image_from_url(img_source)
img = read_image_to_tensor(img_raw)
img = img.to(device)
images = [img]
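As a rough mental model of the preprocessing step, the sketch below converts an `H x W x C` `uint8` array into a `C x H x W` float tensor scaled to `[0, 1]`. This is an assumption about what `read_image_to_tensor` does, not its actual source, and the helper name `hwc_uint8_to_chw_float` is hypothetical.

```python
import numpy as np
import torch


def hwc_uint8_to_chw_float(image: np.ndarray) -> torch.Tensor:
    """Hypothetical helper: HWC uint8 image -> CHW float32 tensor in [0, 1]."""
    # Cast to float32 and scale pixel values from [0, 255] to [0, 1]
    image = np.ascontiguousarray(image, dtype=np.float32) / 255.0
    # Move the channel axis first: (H, W, C) -> (C, H, W)
    image = np.transpose(image, (2, 0, 1))
    return torch.from_numpy(np.ascontiguousarray(image))


# A dummy all-white 1080x810 RGB image standing in for the downloaded bus.jpg
dummy = np.full((1080, 810, 3), 255, dtype=np.uint8)
tensor = hwc_uint8_to_chw_float(dummy)
print(tensor.shape, tensor.dtype, tensor.max().item())
```

The model then receives a Python list of such tensors, which is why the cell wraps the single image as `images = [img]`.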

Model definition and initialization

[4]:

score_thresh = 0.55
stride = 64
img_h, img_w = 640, 640
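`stride = 64` matches the largest downsampling stride of the P6 (`n6`) models. One common YOLOv5-style preprocessing scheme resizes the image to fit inside `img_h x img_w` while keeping the aspect ratio, then pads each side up to a multiple of the stride. The sketch below shows that arithmetic under this assumption; it is not yolort's internal transform, and `letterbox_shape` is a hypothetical name.

```python
import math
from typing import Tuple


def letterbox_shape(
    h: int, w: int, target: Tuple[int, int] = (640, 640), stride: int = 64
) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Hypothetical helper: resized and stride-padded shape for an h x w image."""
    # Scale so the whole image fits inside the target, keeping the aspect ratio
    ratio = min(target[0] / h, target[1] / w)
    new_h, new_w = int(round(h * ratio)), int(round(w * ratio))
    # Pad each side up to the next multiple of the stride
    pad_h = math.ceil(new_h / stride) * stride
    pad_w = math.ceil(new_w / stride) * stride
    return (new_h, new_w), (pad_h, pad_w)


# The bus image used above is 1080 (height) x 810 (width)
resized, padded = letterbox_shape(1080, 810)
print(resized, padded)
```

Under these assumptions the bus image would be resized to 640x480 and padded to 640x512, so every feature map down to stride 64 has an integer size.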

[5]:

from yolort.models import yolov5n6

[6]:

model = yolov5n6(pretrained=True, score_thresh=score_thresh, size=(img_h, img_w))

Downloading: "https://github.com/zhiqwang/yolov5-rt-stack/releases/download/v0.5.2-alpha/yolov5_darknet_pan_n6_r60_coco-4e823e0f.pt" to /home/pc/.cache/torch/hub/checkpoints/yolov5_darknet_pan_n6_r60_coco-4e823e0f.pt

[7]:

model = model.eval()
model = model.to(device)

[8]:

# Perform inference on a list of image tensors
model_out = model(images)

/media/pc/data/4tb/lxw/anaconda3/envs/torch/lib/python3.10/site-packages/torch/functional.py:568: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at /opt/conda/conda-bld/pytorch_1646755897462/work/aten/src/ATen/native/TensorShape.cpp:2228.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
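The `UserWarning` above is raised because `torch.meshgrid` is called inside the model without the `indexing` argument; it is a notice about a future default change, not an error. For reference, a YOLO-style grid of cell offsets can be built with the argument passed explicitly, as in this sketch. The feature-map size is an arbitrary example and `make_grid` is a hypothetical helper, not yolort's code.

```python
import torch


def make_grid(ny: int, nx: int) -> torch.Tensor:
    """Hypothetical helper: (ny, nx, 2) grid of (x, y) cell offsets."""
    ys = torch.arange(ny)
    xs = torch.arange(nx)
    # indexing="ij" keeps the current default behaviour and silences the warning
    yv, xv = torch.meshgrid(ys, xs, indexing="ij")
    return torch.stack((xv, yv), dim=2)


grid = make_grid(2, 3)
print(grid.shape)
print(grid[1, 2])
```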

Verify the inference results of the PyTorch backend

[9]:

model_out[0]['boxes'].cpu().detach()

[9]:

tensor([[ 32.27846, 225.15266, 811.47729, 740.91071],
        [ 50.42179, 387.48911, 241.54399, 897.61035],
        [219.03331, 386.14352, 345.77689, 869.02582],
        [678.05023, 374.65326, 809.80341, 874.80621]])

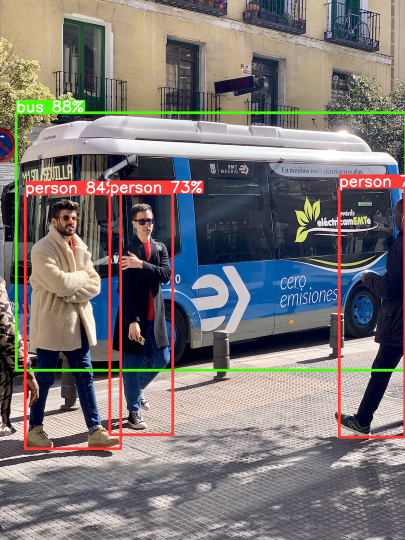

Expected boxes (yolov5n6, image shape 1080x810):

tensor([[ 32.27846, 225.15259, 811.47729, 740.91077],
        [ 50.42178, 387.48911, 241.54393, 897.61035],
        [219.03334, 386.14346, 345.77686, 869.02582],
        [678.05023, 374.65341, 809.80341, 874.80621]])
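The computed boxes and the expected ones differ only in the fourth or fifth decimal place. Instead of checking by eye, the comparison can be made with `torch.testing.assert_close` and an explicit tolerance; the `atol=1e-3` below is an assumed tolerance, not one specified by yolort.

```python
import torch

# Boxes printed by the PyTorch backend above (x1, y1, x2, y2)
actual = torch.tensor([
    [32.27846, 225.15266, 811.47729, 740.91071],
    [50.42179, 387.48911, 241.54399, 897.61035],
    [219.03331, 386.14352, 345.77689, 869.02582],
    [678.05023, 374.65326, 809.80341, 874.80621],
])
# Expected boxes for yolov5n6 on the 1080x810 bus image
expected = torch.tensor([
    [32.27846, 225.15259, 811.47729, 740.91077],
    [50.42178, 387.48911, 241.54393, 897.61035],
    [219.03334, 386.14346, 345.77686, 869.02582],
    [678.05023, 374.65341, 809.80341, 874.80621],
])

# Absolute tolerance of 1e-3 pixels (assumed); rtol=0 disables the relative term
torch.testing.assert_close(actual, expected, rtol=0.0, atol=1e-3)
max_diff = (actual - expected).abs().max().item()
print(f"max abs difference: {max_diff:.5f}")
```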

[10]:

model_out[0]['scores'].cpu().detach()

[10]:

tensor([0.88238, 0.84486, 0.72629, 0.70077])

Expected scores (yolov5n6, image shape 1080x810):

tensor([0.88238, 0.84486, 0.72629, 0.70077])
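All four scores are above `score_thresh = 0.55`, consistent with lower-confidence candidates being discarded. The effect of such a threshold can be sketched with a boolean mask. The fifth detection (score 0.31, label 2) is made up purely to show one being dropped, and the model's full post-processing, which also runs non-maximum suppression, is not reproduced here.

```python
import torch

score_thresh = 0.55
# Scores and labels printed above, plus one hypothetical low-confidence detection
scores = torch.tensor([0.88238, 0.84486, 0.72629, 0.70077, 0.31])
labels = torch.tensor([5, 0, 0, 0, 2])

# Keep only detections whose confidence exceeds the threshold
keep = scores > score_thresh
print(scores[keep], labels[keep])
```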

[11]:

model_out[0]['labels'].cpu().detach()

[11]:

tensor([5, 0, 0, 0])

Expected labels:

tensor([5, 0, 0, 0])

Visualize the detection output

First, fetch the class names of the COCO dataset.

[12]:

# Alternative source for the label file:
# label_source = "https://raw.githubusercontent.com/zhiqwang/yolov5-rt-stack/main/notebooks/assets/coco.names"
label_source = "https://huggingface.co/spaces/zhiqwang/assets/resolve/main/coco.names"
label_path = label_source.split("/")[-1]  # local file name: "coco.names"
safe_download(label_path, label_source)

Downloading https://huggingface.co/spaces/zhiqwang/assets/resolve/main/coco.names to coco.names...
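Assuming `coco.names` lists one class name per line, a predicted label is simply a line index into that file. The sketch below inlines only the first six COCO class names to stay self-contained; `parse_names` is a hypothetical helper. With it, the predicted labels `[5, 0, 0, 0]` read as one bus and three people.

```python
from typing import List

# First six lines of a coco.names-style file (one class name per line)
names_text = """person
bicycle
car
motorcycle
airplane
bus
"""


def parse_names(text: str) -> List[str]:
    """Return class names in file order, skipping blank lines."""
    return [line.strip() for line in text.splitlines() if line.strip()]


names = parse_names(names_text)
predicted = [5, 0, 0, 0]  # labels predicted for the bus image above
print([names[i] for i in predicted])
```

Under the same one-name-per-line assumption, `parse_names(open(label_path).read())` would give the full list from the downloaded file.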

[13]:

v = Visualizer(img_raw, metalabels=label_path)
v.draw_instance_predictions(model_out[0])
v.imshow(scale=0.5)

Scripting YOLOv5

[14]:

# TorchScript export
print(f"Starting TorchScript export with torch {torch.__version__}...")
export_script_name = "yolov5n6.torchscript.pt"  # filename

Starting TorchScript export with torch 1.11.0...

[15]:

model_script = torch.jit.script(model)
model_script.eval()
model_script = model_script.to(device)

[16]:

# Save the scripted model to a file for later use (optional)
model_script.save(export_script_name)
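The saved file can later be restored with `torch.jit.load`, without needing the Python model definition. Since scripting the real detector requires downloading its checkpoint, the sketch below demonstrates the same save/load round trip on a tiny stand-in module; `TinyNet` and the file name are hypothetical.

```python
import os
import tempfile

import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for the detector, small enough to script instantly."""

    def __init__(self) -> None:
        super().__init__()
        self.conv = nn.Conv2d(3, 4, kernel_size=3, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.conv(x))


tiny_model = TinyNet().eval()
tiny_script = torch.jit.script(tiny_model)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "tiny.torchscript.pt")
    tiny_script.save(path)  # same method as model_script.save(export_script_name)
    # map_location lets a model saved on GPU be restored on a CPU-only machine
    tiny_loaded = torch.jit.load(path, map_location="cpu")

sample = torch.rand(1, 3, 8, 8)
with torch.no_grad():
    round_trip_ok = torch.allclose(tiny_model(sample), tiny_loaded(sample))
print(round_trip_ok)
```

For the notebook's own file, the analogous call would be `torch.jit.load(export_script_name, map_location=device)`.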

Inference with the TorchScript backend

[17]:

out = model(images)
out_script = model_script(images)

/media/pc/data/4tb/lxw/anaconda3/envs/torch/lib/python3.10/site-packages/yolort/models/yolo.py:179: UserWarning: YOLO always returns a (Losses, Detections) tuple in scripting.

warnings.warn("YOLO always returns a (Losses, Detections) tuple in scripting.")

/media/pc/data/4tb/lxw/anaconda3/envs/torch/lib/python3.10/site-packages/yolort/models/yolov5.py:180: UserWarning: YOLOv5 always returns a (Losses, Detections) tuple in scripting.

warnings.warn("YOLOv5 always returns a (Losses, Detections) tuple in scripting.")
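As the warnings state, the scripted model always returns a `(losses, detections)` tuple, while the eager model in `eval()` mode returns the list of detections directly. That is why the verification cell that follows reads the scripted result as `out_script[1][0]` but the eager one as `out[0]`. The toy module below is a hypothetical stand-in that only mimics the scripted return convention.

```python
from typing import Dict, List, Tuple

import torch
from torch import nn


class ToyDetector(nn.Module):
    """Hypothetical stand-in returning (losses, detections) like the scripted YOLOv5."""

    def forward(
        self, images: List[torch.Tensor]
    ) -> Tuple[Dict[str, torch.Tensor], List[Dict[str, torch.Tensor]]]:
        losses: Dict[str, torch.Tensor] = {}  # empty at inference time
        detections: List[Dict[str, torch.Tensor]] = []
        for img in images:
            # One dict per input image, keyed like the real outputs
            detections.append({"scores": img.flatten()[:2]})
        return losses, detections


toy_script = torch.jit.script(ToyDetector())
toy_losses, toy_detections = toy_script([torch.tensor([[0.9, 0.7, 0.1]])])
print(toy_losses, toy_detections[0]["scores"])
```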

Verify the inference output on the LibTorch backend

[18]:

# The scripted model returns (losses, detections), so detections are at index 1
for k, v in out[0].items():
    torch.testing.assert_allclose(out_script[1][0][k], v, rtol=1e-07, atol=1e-09)

print("Exported model has been tested with libtorch, and the result looks good!")

Exported model has been tested with libtorch, and the result looks good!
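`torch.testing.assert_allclose` works in torch 1.11 but is deprecated in later releases in favour of `torch.testing.assert_close`, which also accepts mappings, so the per-key loop can collapse into a single call. A sketch with hypothetical stand-in dictionaries; `rtol=0, atol=0` requests exact equality, which is stricter than the tolerances used above.

```python
import torch

# Hypothetical stand-ins for out[0] (eager) and out_script[1][0] (scripted)
eager_det = {
    "boxes": torch.tensor([[32.27846, 225.15266, 811.47729, 740.91071]]),
    "scores": torch.tensor([0.88238]),
    "labels": torch.tensor([5]),
}
scripted_det = {k: v.clone() for k, v in eager_det.items()}

# assert_close compares mappings key by key and raises AssertionError on mismatch
torch.testing.assert_close(scripted_det, eager_det, rtol=0.0, atol=0.0)
print("outputs match")
```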

Compare PyTorch and LibTorch inference time

Time taken by the PyTorch backend

[19]:

%%timeit
with torch.no_grad():
    out = model(images)

17.1 ms ± 54.2 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

Time taken by the LibTorch backend

[20]:

# Dummy warm-up run for the TorchScript model before timing
with torch.no_grad():
    out_script = model_script(images)

[21]:

%%timeit
with torch.no_grad():
    out_script = model_script(images)

11.3 ms ± 430 µs per loop (mean ± std. dev. of 7 runs, 1 loop each)
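`%%timeit` measures wall-clock time, and CUDA kernels are launched asynchronously, so on a GPU it is generally safer to warm up first and synchronize before reading the clock. A minimal stand-alone timing helper under those assumptions is sketched below; `benchmark` is a hypothetical name, and the example times a small `nn.Linear` on CPU so that it runs anywhere.

```python
import time
from typing import Callable

import torch
from torch import nn


def benchmark(fn: Callable[[], object], warmup: int = 3, iters: int = 20) -> float:
    """Hypothetical helper: mean seconds per call of fn, after warm-up runs."""
    with torch.no_grad():
        for _ in range(warmup):
            fn()
        if torch.cuda.is_available():
            torch.cuda.synchronize()  # drain queued kernels before starting the clock
        start = time.perf_counter()
        for _ in range(iters):
            fn()
        if torch.cuda.is_available():
            torch.cuda.synchronize()  # wait until all timed work has finished
        return (time.perf_counter() - start) / iters


layer = nn.Linear(64, 64).eval()
scripted_layer = torch.jit.script(layer)
batch = torch.rand(8, 64)

eager_s = benchmark(lambda: layer(batch))
scripted_s = benchmark(lambda: scripted_layer(batch))
print(f"eager: {eager_s * 1e3:.3f} ms, scripted: {scripted_s * 1e3:.3f} ms")
```

In the notebook, `benchmark(lambda: model(images))` and `benchmark(lambda: model_script(images))` would time the two backends the same way.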

View this document as a notebook: https://github.com/zhiqwang/yolov5-rt-stack/blob/main/notebooks/inference-pytorch-export-libtorch.ipynb